INTRODUCTION

Liu Shen’s Artificial Intelligence (AI) Team was established in June 2017 and is composed of attorneys with strong technical backgrounds in computer science or related fields and rich experience in IP protection. Since its establishment, the Team has focused on studying the development of the AI industry, government policies for supporting and regulating AI research and applications, and the laws, regulations and practices of Intellectual Property (IP) protection for AI in China and in other countries and regions active in the AI field. The research results of Liu Shen’s AI Team include the special reports “Patent Protection of Intellectual Property (2018)” and “Protecting Artificial Intelligence Patents (2019),” the collection of featured articles “Intellectual Property Protection of Artificial Intelligence in China,” and other articles and presentations on IP protection of AI in China and other countries and regions.

This report, “Intellectual Property Protection of Artificial Intellectual Property” (2021), is a research report by Liu Shen’s AI Team, mainly about intellectual property protection of AI in China and in other countries and regions, including the United States, Europe, Japan and South Korea. The report aims to help companies and IP practitioners understand the overall development of the AI industry and various aspects of AI-related IP protection.


Chapter I

Overview of AI Technology

1.1 Birth and development of Artificial Intelligence

If the birth of modern artificial intelligence as a discipline is marked by the earliest appearance of the term “Artificial Intelligence (AI)”, it would be the Dartmouth Artificial Intelligence Conference, proposed in 1955 and held in 1956. It is generally accepted that the term was formally proposed by John McCarthy, the convener of the conference.[1] The conference aimed to bring like-minded people together to discuss “AI”. In the conference proposal, McCarthy et al. wrote: “We propose that a 2-month, 10-man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College in Hanover, New Hampshire. The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.[2]” The conference lasted a month and was essentially a large-scale brainstorming session. It gave birth to the AI revolution now known to all.

In 2006, participants of the conference reunited at Dartmouth

From left: Moore, McCarthy, Minsky, Selfridge, Solomonoff

After its birth, AI went through a trough between the 1970s and the 1980s. In the 1970s, AI development fell into “a cold winter” because systems could not handle large-scale data or complex tasks and computing power could not achieve a breakthrough. In 1980, the XCON expert system developed by Carnegie Mellon University (CMU) was officially put into use. It became a milestone of a new era in which expert systems began to exert their power in specific fields, leading AI technology as a whole toward prosperity.

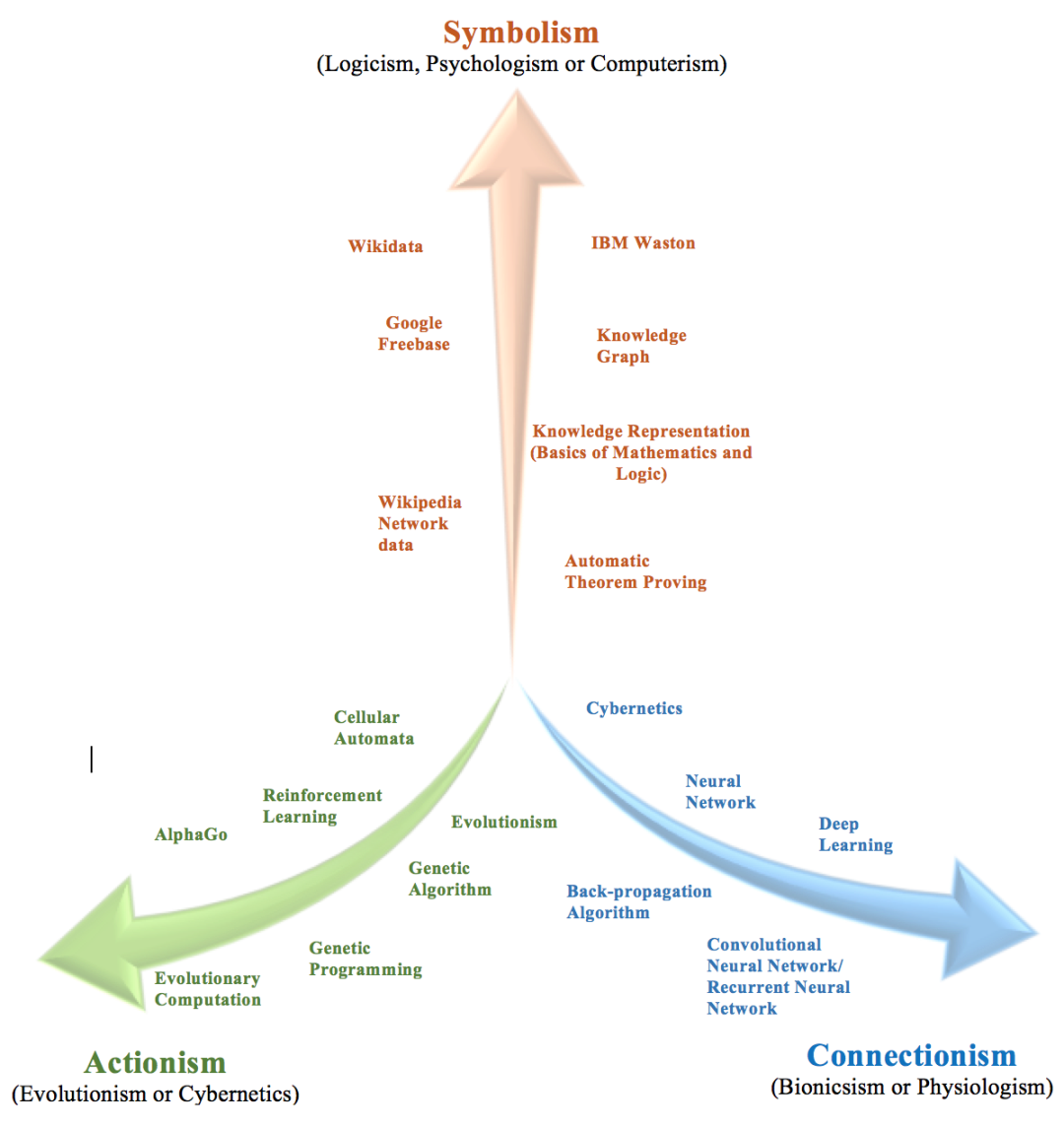

After more than half a century of development, numerous far-reaching technologies, scholars, companies and products have emerged in the field of AI, giving rise to many different schools of thought. Currently, there are three main AI schools:

(1) Symbolism, also known as Logicism, Psychologism or Computerism, whose main principles are the physical symbol system hypothesis (i.e., the symbolic operating system) and the principle of bounded rationality.

(2) Connectionism, also known as Bionicism or Physiologism, whose main principles are neural networks and the connection mechanisms and learning algorithms among them.

(3) Actionism, also known as Evolutionism or Cybernetics, whose principles are cybernetics and the “perception-action” control system. Actionism holds that action is a combination of an organism’s bodily responses to environmental changes, and its theoretical goal is to predict and control actions.

Figure 1.1-1

Symbolism reduces all information to operable symbols, much as a mathematician solving an equation replaces one expression with another equivalent one. Nowadays, most AI systems are based on symbolism. In the industrial age, this school gained a great deal of attention because symbolic AI designs are the easiest to apply to standardized processes. One of the most representative examples of symbolism is IBM’s Deep Blue, which defeated world chess champion Garry Kasparov and made people realize, for the first time, the formidable progress of AI.
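To make the idea of “replacing one expression with another” concrete, the following minimal Python sketch simplifies algebraic expressions purely by symbolic rewriting. The nested-tuple representation and the particular rewrite rules are assumptions chosen for brevity; they are not taken from Deep Blue or any other specific system.

```python
# Expressions are nested tuples such as ("+", a, b) or ("*", a, b);
# leaves are variable names (str) or integer constants (int).
def simplify(expr):
    """Recursively rewrite an expression using a few algebraic identities."""
    if not isinstance(expr, tuple):
        return expr  # a symbol or a number cannot be rewritten further
    op, left, right = expr
    left, right = simplify(left), simplify(right)
    # Constant folding: replace an operation on two numbers by its value.
    if isinstance(left, int) and isinstance(right, int):
        return left + right if op == "+" else left * right
    if op == "+":
        if left == 0:
            return right  # 0 + x -> x
        if right == 0:
            return left   # x + 0 -> x
    if op == "*":
        if left == 0 or right == 0:
            return 0      # 0 * x -> 0
        if left == 1:
            return right  # 1 * x -> x
        if right == 1:
            return left   # x * 1 -> x
    return (op, left, right)
```

For example, `simplify(("+", ("*", "x", 1), ("*", 0, "y")))` reduces to `"x"`; every step is a symbol substitution, and nothing is learned from data.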

Symbolism thrived for a long time and made important contributions to the development of AI, especially to the successful development and application of expert systems, which were of great significance in moving AI toward engineering applications and integrating theory with practice. Later, people discovered that symbolism also has shortcomings. Decision-making requires knowledge, and much intuitive knowledge cannot easily be expressed through symbolic reasoning; instead, computers must learn it from data.
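Expert systems typically encode knowledge as if-then rules and derive conclusions by chaining them together. The minimal Python sketch below shows forward chaining; the rule and fact names are hypothetical and only loosely in the style of a computer-configuration system such as XCON, whose real rule base was far larger.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new fact can be derived.

    facts: iterable of strings known to be true.
    rules: list of (premises, conclusion) pairs; premises is a set of strings.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule "fires" when all its premises are known and it adds a new fact.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rules, loosely in the style of a configuration expert system.
RULES = [
    ({"order_has_disk"}, "needs_disk_controller"),
    ({"needs_disk_controller", "cabinet_full"}, "needs_expansion_cabinet"),
]
```

Calling `forward_chain({"order_has_disk", "cabinet_full"}, RULES)` first derives `needs_disk_controller` and then, in a second pass, `needs_expansion_cabinet`. All knowledge lives in hand-written rules, which is exactly why intuitive knowledge that resists explicit rules is hard to capture this way.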

In 1986, the American psychologist David Rumelhart et al. proposed the back-propagation algorithm for multi-layer networks (back-propagation is an application of the reverse mode of automatic differentiation). Since then, connectionism has gained great momentum, from models to algorithms and from theoretical analysis to engineering implementation, laying the foundation for neural network computing to enter the market.
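Back-propagation applies the chain rule from a network’s output back toward its inputs. The minimal Python sketch below implements reverse-mode automatic differentiation for scalar addition, multiplication and the sigmoid function. The `Value` class is a hypothetical teaching construct, not the formulation used by Rumelhart et al. or the API of any particular library.

```python
import math


class Value:
    """A scalar that records how it was computed, for reverse-mode differentiation."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # inputs this value was computed from
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def sigmoid(self):
        s = 1.0 / (1.0 + math.exp(-self.data))
        return Value(s, (self,), (s * (1.0 - s),))

    def backward(self):
        """Propagate gradients from this output back to every input (chain rule)."""
        order, seen = [], set()

        def visit(node):  # topological sort: parents are listed before children
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):  # walk from the output back to the inputs
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad
```

For f = x * y + x at x = 2 and y = 3, calling `f.backward()` gives `x.grad == 4.0` (that is, y + 1) and `y.grad == 2.0` (that is, x). In a neural network, the same backward pass yields the gradient of the loss with respect to every weight.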

Today, the Canadian computer scientist Yoshua Bengio leads a team working to revitalize symbolic AI with deep learning.

Actionists believe that learning is the link between stimulus and response, and they assume that action is a learner’s response to environmental stimuli. Learning is a gradual process of trial and error, and reinforcement is the key to successful learning. In terms of actionism, robot interaction within deep learning models is a practical embodiment of this approach: the robot constantly receives feedback and evolves as it learns. One of the most representative examples of actionism is AlphaGo, the intelligent system developed by Google’s DeepMind, which defeated Lee Sedol, a world Go champion and South Korean 9-dan professional, 4-1 in March 2016, causing a sensation around the world. From May 23 to 27, 2017, AlphaGo won 3-0 against Ke Jie, the Chinese player then ranked first in the world, in the three-game “Man-machine War 2.0”.

Deep learning and deep neural networks, which currently have the strongest development momentum and attract the most attention, belong to connectionism; the equally popular knowledge graph, and the expert systems that were important during the second wave of the AI industry in the last century, belong to symbolism; actionism has contributed mainly to robot control systems.

In the second decade of the 21st century, with the explosion of mobile Internet, big data, cloud computing, and IoT technologies, AI technology entered a new era of convergence. From AlphaGo’s victory over Lee Sedol to Microsoft’s speech recognition surpassing human performance, Google’s autonomous driving, Boston Dynamics robots, smart speakers across the market, and neural network chips and intelligent applications in everyone’s mobile phone, AI has changed from something invisible into a tangible part of everyone’s work and life, and the vision described by scientists more than half a century ago is being realized step by step.

[1] McCarthy recalled in his later years that he had heard the term “Artificial Intelligence” from others, which means that “AI” was not his original coinage. AI derives from Machine Intelligence, which was first proposed by Turing in Intelligent Machinery, an internal report of the National Physical Laboratory (NPL). According to Wikipedia, the two terms are synonymous.

[2] See J. McCarthy et al., Dartmouth AI Proposal, August 31, 1955.

1.2 Definition of AI and current status of technology

1.2.1 Definition of Artificial Intelligence

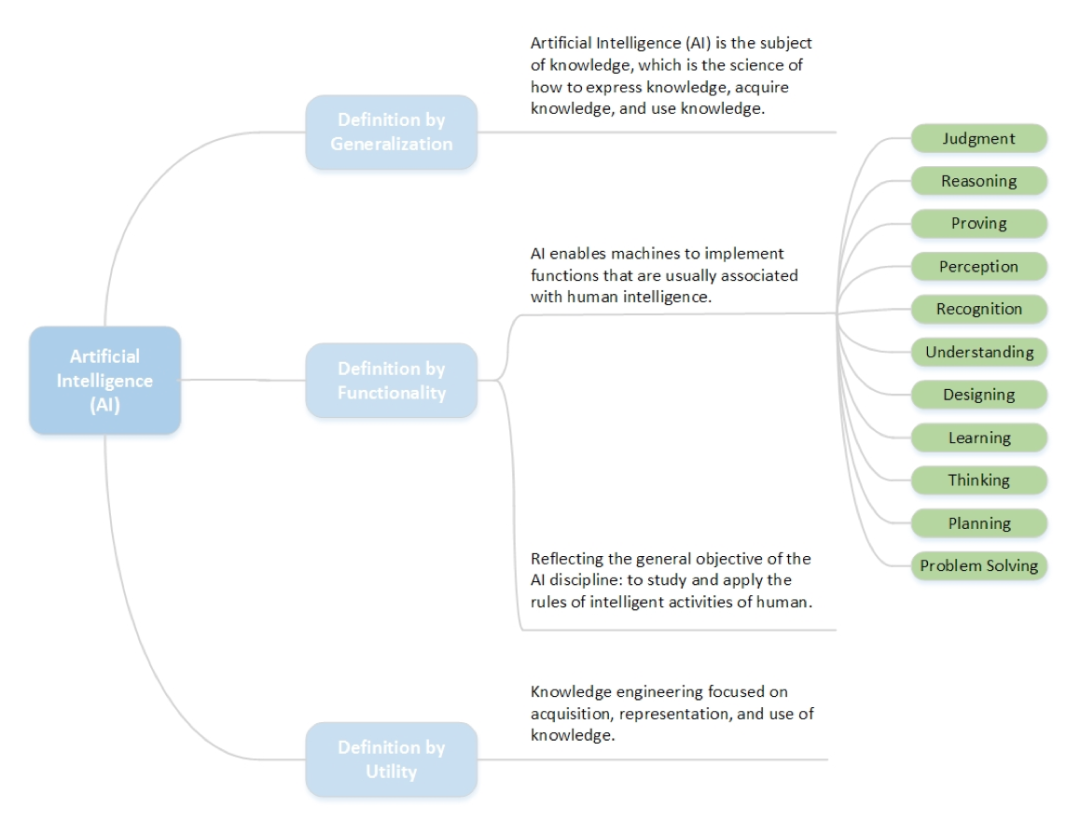

Generally speaking, Artificial Intelligence (AI) is the discipline of knowledge: the science of how to represent, acquire, and use knowledge. In terms of functionality, AI enables machines to perform functions usually associated with human intelligence, including mental activities such as judgment, reasoning, proving, perception, recognition, understanding, designing, learning, thinking, planning and problem solving. These reflect the general objective of the AI discipline: to study and apply the laws of human intelligent activity. From the perspective of utility, AI is knowledge engineering focused on the acquisition, representation, and use of knowledge, as shown in the following figure.

Figure 1.2.1-1

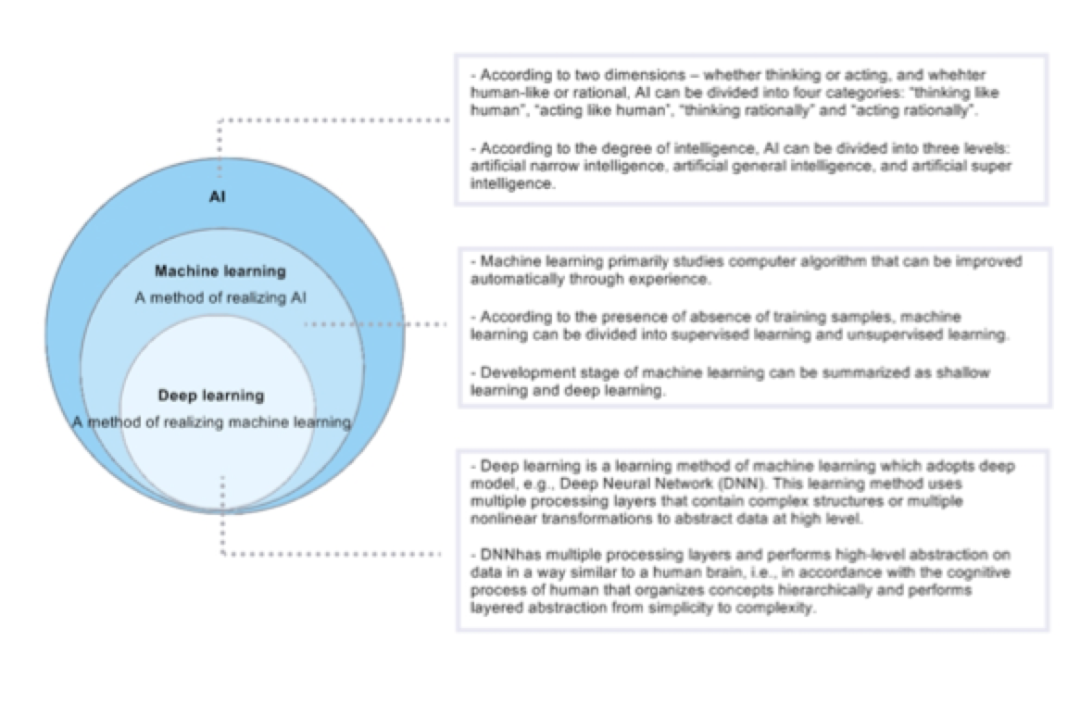

In the AI field, three technical concepts are used most frequently: machine learning, deep learning and neural networks. Generally speaking, machine learning is a way of realizing artificial intelligence, and deep learning is a way of realizing machine learning by combining deep neural networks (DNNs) with learning algorithms. The following figure briefly introduces the three concepts and illustrates their relationship with AI.

Figure 1.2.1-2


1.2.2 Specialized Systems of AI

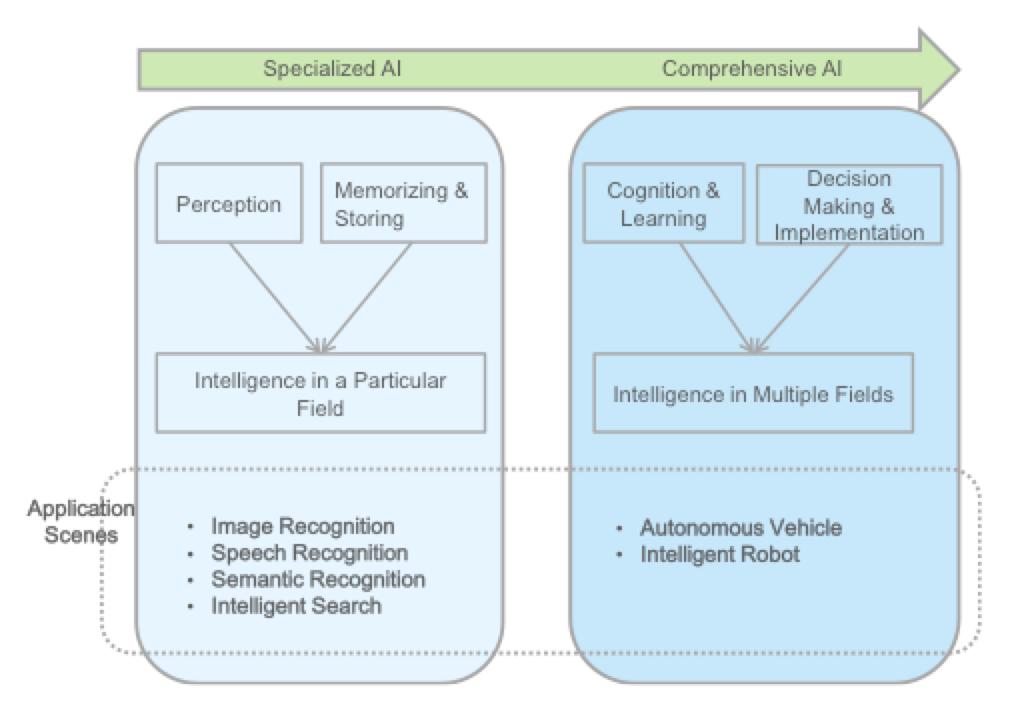

According to the degree of specialization, AI can be divided into specialized AI and comprehensive AI, as shown in the figure below.

· Specialized AI: capable only of elementary, role-based tasks;

· Comprehensive AI: capable of human-level tasks and involving continuous machine learning.

Figure 1.2.2-1

Judging from current application scenarios, AI is still “specialized”, focused on particular fields of application. However, it will eventually evolve into comprehensive AI as computing capacity and data volume increase significantly and algorithms improve.

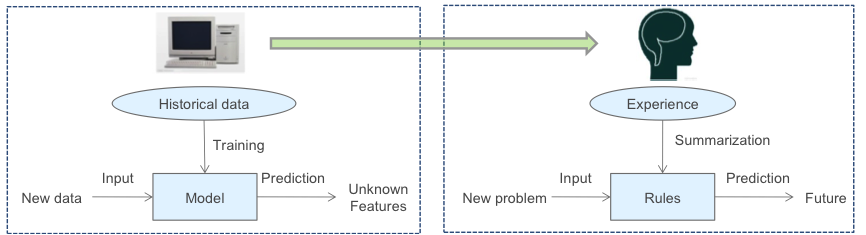

1.2.2.1 Machine Learning

Machine learning is a branch of artificial intelligence that studies how to design, analyze and improve automatic learning algorithms. Here, "automatic learning" means that a computer, like the human brain, can automatically discover rules by analyzing data and use those rules to predict unknown properties, without traditional programming in which the features to be recognized must be specified in advance.
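To make “discovering a rule from data and predicting with it” concrete, here is a minimal Python sketch using ordinary least squares, one of the simplest learning algorithms. It is chosen only for illustration (the text does not name a specific algorithm), and the training data are made up from the rule y = 2x + 1.

```python
def fit_line(xs, ys):
    """Learn y ≈ a*x + b from examples by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var              # slope that minimizes the squared error
    b = mean_y - a * mean_x    # intercept
    return a, b


# The rule y = 2x + 1 is never written into the program; it is recovered from data.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
a, b = fit_line(xs, ys)
prediction = a * 10.0 + b      # predict the output for an unseen input
```

The program contains no hand-coded knowledge of the relationship; the slope 2 and intercept 1 are estimated from the examples, and the prediction for x = 10 follows from them.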

Figure 1.2.2.1-1

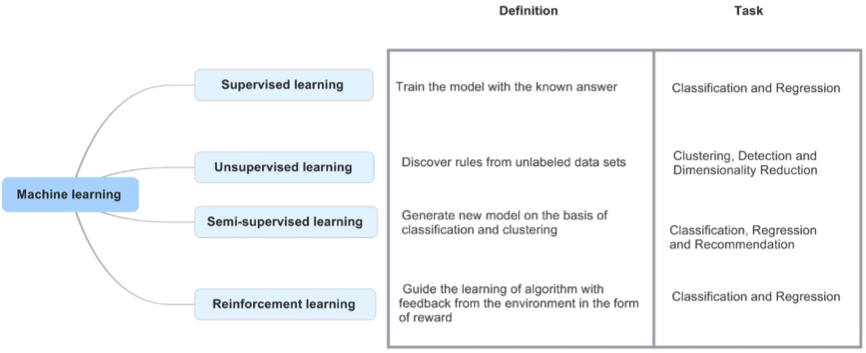

In the field of machine learning, there are mainly four types of learning methods:

Supervised Learning:

Learns from labelled training examples in order to make predictions on data outside the training set. In supervised learning all labels are known, so the training examples have low ambiguity. A task is called regression when the algorithm’s output is continuous and classification when the output is discrete.
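Supervised classification can be sketched with a k-nearest-neighbour classifier, used here only as an illustrative choice: every labelled example near the query casts a vote, and the predicted label is discrete. The training points and the labels "A" and "B" are invented for the example.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest labelled examples.

    train: list of ((x, y), label) pairs.
    """
    neighbours = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Hypothetical labelled training set: two well-separated groups.
TRAIN = [((1.0, 1.0), "A"), ((1.5, 2.0), "A"), ((2.0, 1.0), "A"),
         ((6.0, 6.0), "B"), ((7.0, 5.5), "B"), ((6.5, 7.0), "B")]
```

Because every training label is known, a query such as `(1.2, 1.4)` is assigned "A" simply by looking at its labelled neighbours. `math.dist` requires Python 3.8 or later.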

Unsupervised Learning:

Learns from unlabeled training examples in order to discover structural knowledge in the training set. In unsupervised learning all labels are unknown, so the training examples are highly ambiguous. Clustering is a typical unsupervised learning task.
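Clustering can be illustrated with k-means (Lloyd’s algorithm), a standard clustering method chosen here as an example. In this minimal Python sketch, the initial centroids and the fixed number of iterations are assumptions supplied by the caller, and no labels are used at any point.

```python
import math


def kmeans(points, centroids, iterations=10):
    """Group unlabelled 2-D points into clusters with Lloyd's k-means algorithm.

    centroids: initial guesses, one per cluster (chosen by the caller).
    Returns the final centroids and the points assigned to each cluster.
    """
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points.
        for i, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centroid
                centroids[i] = (sum(x for x, _ in members) / len(members),
                                sum(y for _, y in members) / len(members))
    return centroids, clusters


# Hypothetical unlabelled data with two natural groups.
POINTS = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 6.0), (7.0, 5.5), (6.5, 7.0)]
centroids, clusters = kmeans(POINTS, [(0.0, 0.0), (10.0, 10.0)])
```

The algorithm discovers the two groups from the geometry of the data alone. The result depends on the initial centroids, which is why practical implementations often try several starting points.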

Semi-supervised Learning:

Performs training and classification using both labelled and unlabeled examples. Semi-supervised learning uses unlabeled data to build models that generalize better over the entire data distribution. Apart from the labelled examples it starts from, the learning process requires no manual intervention and relies solely on the learning system’s own exploitation of the unlabeled data.
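One common semi-supervised technique is self-training, named here as an example rather than as a method the text prescribes. The minimal Python sketch below fits a nearest-centroid model to a few labelled points, repeatedly pseudo-labels the unlabeled points with the current model, and refits. For brevity it pseudo-labels every point; practical variants usually keep only confident predictions. The data are made up.

```python
import math


def centroid(points):
    """Component-wise mean of a list of equal-length tuples."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def self_train(labelled, unlabelled, rounds=3):
    """Self-training with a nearest-centroid classifier.

    labelled: dict mapping each label to a list of points.
    unlabelled: list of points without labels.
    """
    centroids = {label: centroid(pts) for label, pts in labelled.items()}
    for _ in range(rounds):
        # Pseudo-label each unlabelled point with its nearest current centroid.
        pseudo = {label: list(pts) for label, pts in labelled.items()}
        for p in unlabelled:
            label = min(centroids, key=lambda c: math.dist(p, centroids[c]))
            pseudo[label].append(p)
        # Refit the model on labelled plus pseudo-labelled data.
        centroids = {label: centroid(pts) for label, pts in pseudo.items()}
    return centroids


LABELLED = {"A": [(0.0, 0.0)], "B": [(10.0, 10.0)]}
UNLABELLED = [(1.0, 2.0), (2.0, 1.0), (8.0, 9.0), (9.0, 8.0)]
model = self_train(LABELLED, UNLABELLED)
```

With only one labelled point per class, the refitted centroids move to (1, 1) and (9, 9), the centres of the two groups, because the unlabeled points help shape the model.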

Reinforcement Learning:

Learns a mapping from environment states to actions so as to maximize the cumulative reward signal (reinforcement signal). That is, the learner takes actions after observing the environment; each action affects the environment, which in turn provides feedback in the form of a reward that guides the learning algorithm.
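The observe-act-reward loop can be sketched with tabular Q-learning, one standard reinforcement learning algorithm, though the text does not name it. The five-state corridor environment, the reward of 1 at the goal, and the values of `alpha`, `gamma` and `epsilon` are all assumptions for illustration.

```python
import random


def q_learning(n_states=5, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a 1-D corridor: start at state 0, reward 1 at the far end.

    Actions: 0 = move left (blocked by the wall at state 0), 1 = move right.
    Each episode ends when the goal state is reached.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Observe, then act: usually exploit the best-known action, sometimes explore.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # The environment's feedback (reward) updates the value estimate.
            future = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Best action in each state according to the learned values (1 = right)."""
    return [0 if left > right else 1 for left, right in q]
```

After training, the greedy policy moves right in every non-goal state, and the learned values for moving right approach 1, 0.9, 0.81 and 0.729 as the distance from the goal grows, reflecting the discount factor `gamma`.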

Figure 1.2.2.1-2

1.2.2.2 Neural Network

A neural network is a mathematical or computational model that mimics the structure and function of biological neural networks in order to estimate or approximate unknown functions. It performs computation through a large number of interconnected artificial neurons. In most cases, an artificial neural network can adjust its internal parameters based on external information, which makes it an adaptive system.

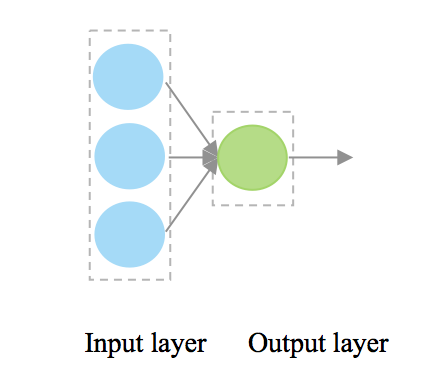

Neural networks are mainly divided into single-layer and multi-layer neural networks, which are introduced separately below:

Single-layer neural network:

Figure 1.2.2.2-1

Fig. 1.2.2.2-1 above shows a single-layer neural network, the most basic form of neural network. It consists of an input layer of neurons and an output layer of neurons, and can solve simple linearly separable problems such as AND, OR and NOT.
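The perceptron learning rule is one classic way to train such a single-layer network. In this minimal Python sketch, the step activation, the learning rate of 1 and the fixed number of epochs are assumptions. Because AND, OR and NOT are linearly separable, the rule finds suitable weights for each.

```python
def predict(weights, bias, inputs):
    """Step-activated output of a single-layer network (one output neuron)."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule.

    samples: list of (inputs, target) pairs with targets 0 or 1.
    """
    n = len(samples[0][0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Weights change only when the output is wrong (error is -1 or +1).
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
OR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
NOT = [((0,), 1), ((1,), 0)]
```

Running the same procedure on the XOR truth table never reaches zero errors, because no single straight line separates XOR’s positive and negative cases; this is the limitation that multi-layer networks overcome.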

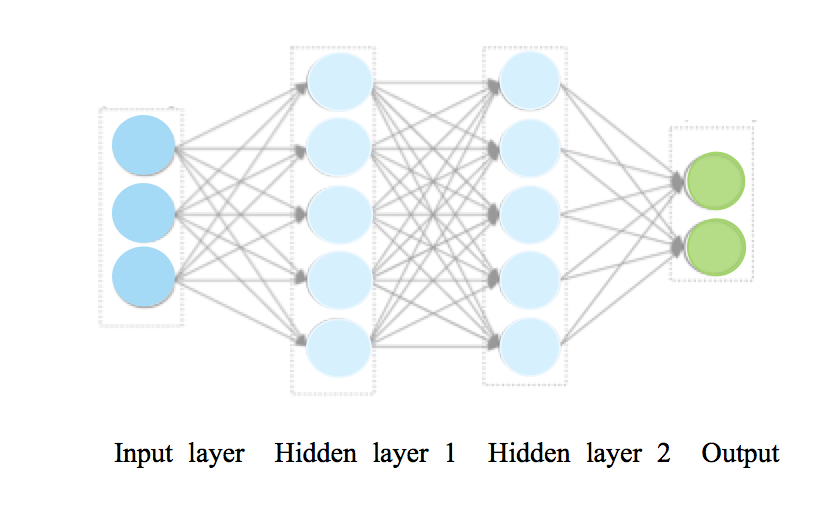

Multi-layer neural network:

Figure 1.2.2.2-2

Fig. 1.2.2.2-2 above shows a multi-layer neural network, which can be understood as a stack of single-layer neural networks. The first layer of the network (the input layer) takes a numeric vector as input; each layer computes weighted sums of its inputs, applies a non-linear activation function, and produces another numeric vector that serves as the input to the next layer (hidden layer 1), and so on. Adjacent layers are connected with compatible vector dimensions. Using a learning algorithm, the network learns statistical regularities from a large number of training examples in order to predict unknown events, forming a neural network "brain" capable of precise and complex processing.
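The layer-by-layer computation can be sketched in Python as follows. Each layer forms weighted sums of its inputs and applies a non-linear step activation. The weights are hand-picked assumptions, not learned, so that one hidden layer of two neurons computes XOR, a function that no single-layer network can represent.

```python
def step(z):
    """Non-linear step activation."""
    return 1 if z > 0 else 0


def layer(inputs, weights, biases, activation=step):
    """One fully connected layer: weighted sums followed by a non-linear activation."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def xor_network(x1, x2):
    # Hidden layer 1: neuron 0 behaves like OR, neuron 1 like AND (hand-picked weights).
    hidden = layer([x1, x2], weights=[[1, 1], [1, 1]], biases=[-0.5, -1.5])
    # Output layer: "OR but not AND", which is XOR.
    (out,) = layer(hidden, weights=[[1, -1]], biases=[-0.5])
    return out
```

The hidden neurons act as learned features would in a trained network: each one detects a simple pattern, and the output layer combines those patterns into a decision that the inputs alone could not linearly separate.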

In multi-layer neural networks, there can be many hidden layers between the input layer and the output layer. Each hidden layer can be understood as a feature layer, and each neuron within it as a feature attribute.

1.2.2.3 Deep Learning

Shallow Learning

Before 2006, machine learning was still at the shallow learning stage. Although the neural networks of that time were called multi-layer neural networks, they usually had only one hidden layer, which limited the multi-level learning of features.

Deep Learning

Deep learning obtains the relevant weights by training a multi-layer neural network, so that data automatically acquires more specific meaning as it passes through the network; the results can then be used directly for image classification, speech recognition, and natural language processing. Specifically, deep learning performs high-level abstraction on data using multiple processing layers that contain complex structures or multiple non-linear transformations. This is analogous to current models of the human brain and consistent with the human cognitive process, which organizes concepts hierarchically and abstracts layer by layer from simple to complex. Deep learning can simulate how the human brain learns, understands, and even resolves ambiguity in the external environment.

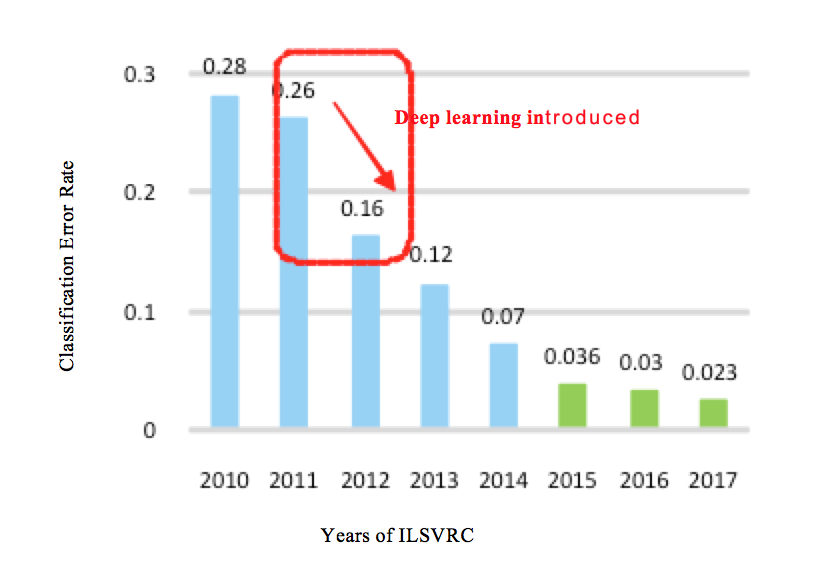

The most impressive illustration of progress in deep learning is the ImageNet Large-Scale Visual Recognition Challenge (ILSVRC), whose competition tasks include classification with localization, object detection in images, object detection in video, and scene recognition and segmentation.

Figure 1.2.2.3-1

Fig. 1.2.2.3-1 above shows how the classification error rate changed over the years. Before 2012, the classification error rate in the competition could not get below 25%. It dropped to 16.4% after deep learning was introduced in 2012, marking the beginning of deep models replacing traditional vision methods, and in 2015 the champion’s classification error rate was as low as 3.57%, surpassing human performance (an average error rate of about 5%) for the first time.

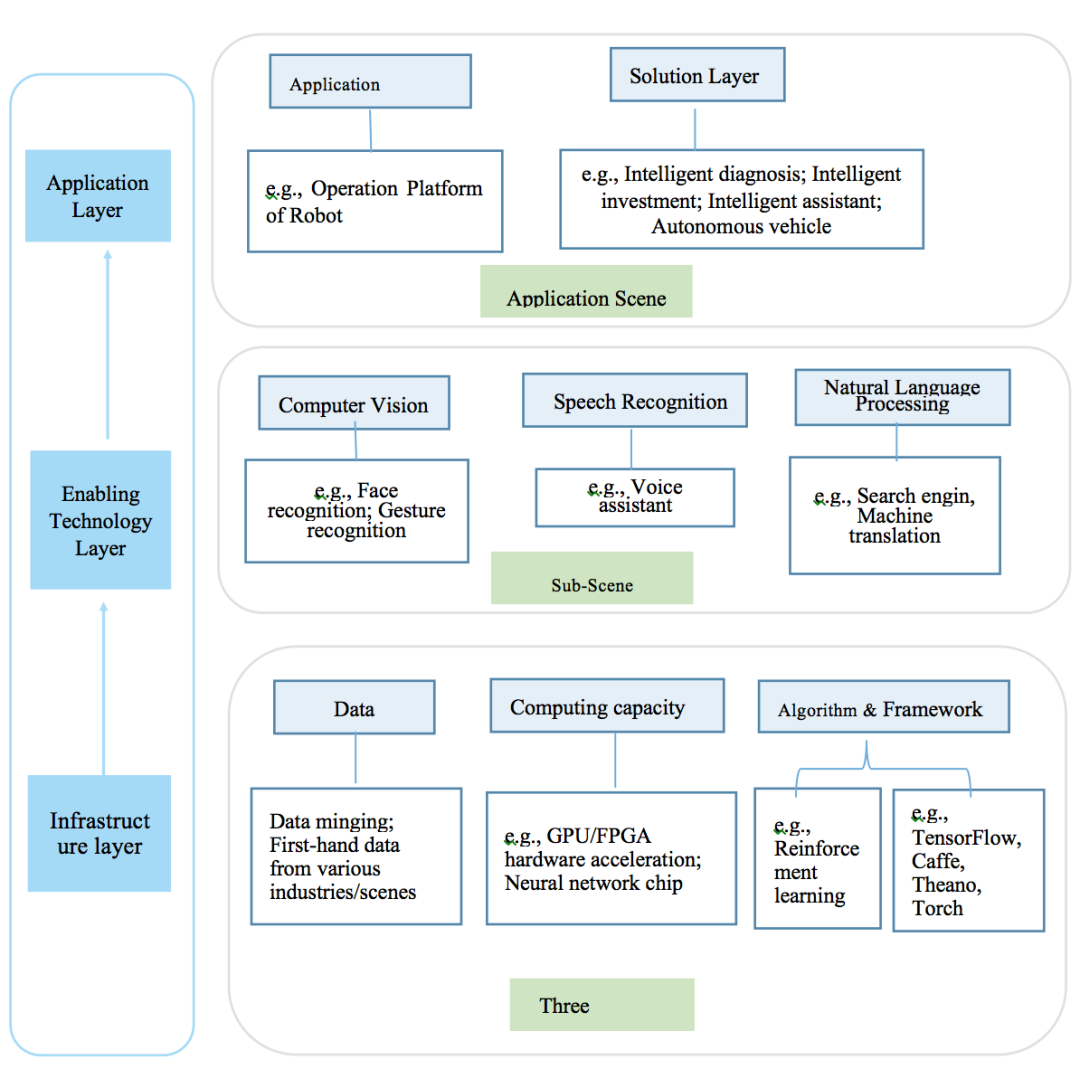

1.2.3 Hierarchy of AI technology

AI technology can be divided into three layers, from bottom to top: an infrastructure layer, an enabling technology layer, and an application layer. The infrastructure layer is nearest to the "cloud", and the application layer is nearest to the "terminal", as shown in Fig. 1.2.3-1 below.

Figure 1.2.3-1

Infrastructure Layer

The infrastructure layer is the core carrier of AI, in which computing capacity, data, and algorithms (& frameworks) are the three elements supporting the development of the AI industry.

| Element | Description |
|---|---|
| Computing capacity | Providers of computing capacity, such as hardware acceleration chips and neural network chips (e.g., GPU/FPGA), big data, and cloud computing |
| Data | First-hand data from various industries and scenarios, such as identity, medical, shopping, and traffic information |
| Algorithm & Framework | Frameworks or operating systems such as TensorFlow, Caffe, Theano, Torch, DMTK, DTPAR, and ROS; various algorithms for deep learning |

Enabling Technology Layer

The enabling technology layer, also called the general technology layer, is built upon the infrastructure layer. Its most basic technologies include computer vision, speech recognition, and natural language processing.

| Technology | Description |
|---|---|
| Computer Vision | Computer vision means that a computer takes the place of the human eye to identify, track and measure objects, and processes images so that they are better suited to observation by the human eye or to being sent to instruments for detection. Computer vision recognition can be further divided into three categories: object recognition, object attribute recognition, and object behavior recognition. Object recognition includes character recognition, human body recognition, and item recognition. Object attribute recognition includes shape recognition and orientation recognition. Object behavior recognition includes movement recognition, gesture recognition, and behavior recognition. |
| Speech Recognition | Speech recognition is a technology by which a machine uses signal processing and recognition techniques to automatically recognize the language spoken by humans and convert the voice signal into corresponding text or commands. Voice interaction technology, which combines speech recognition, speech synthesis, natural language processing and semantic networks, is gradually becoming the main approach to multi-channel, multimedia intelligent human-machine interaction. |
| Natural Language Processing | Natural language processing means computer-based simulation of the human language communication process, enabling computers to understand and use the natural languages of human society so as to achieve natural language communication between humans and machines, thereby replacing part of human mental work, including information lookup, problem solving, document excerpting, material compilation, and all other processing of natural language information. |

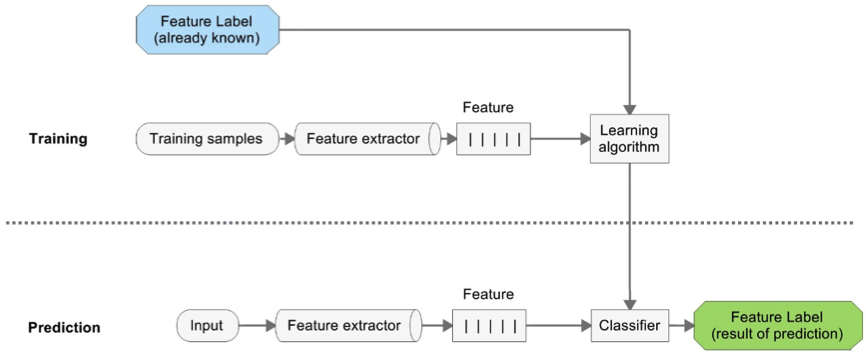

Computer vision, speech recognition, and natural language processing share a common technical workflow: each can be divided into two parts, training and prediction.

For classification tasks, the training part selects and extracts features from training samples and uses a learning algorithm to train a classifier; the prediction part uses the trained classifier to detect, judge, and filter the features of new inputs.
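The two-part workflow can be sketched with a toy example. The feature extractor (mean and range of a 1-D signal), the nearest-centroid classifier, and the "quiet"/"loud" labels are hypothetical choices for illustration, not components of any real vision, speech or language system.

```python
import math


def extract_features(signal):
    """Hypothetical feature extractor: summarize a raw 1-D signal by mean and range."""
    return (sum(signal) / len(signal), max(signal) - min(signal))


def train(samples):
    """Training part: extract features and fit one centroid per class."""
    grouped = {}
    for signal, label in samples:
        grouped.setdefault(label, []).append(extract_features(signal))
    return {label: tuple(sum(c) / len(feats) for c in zip(*feats))
            for label, feats in grouped.items()}


def predict_class(model, signal):
    """Prediction part: extract the same features and pick the nearest class centroid."""
    feats = extract_features(signal)
    return min(model, key=lambda label: math.dist(feats, model[label]))


SAMPLES = [([0.1, 0.2, 0.1, 0.2], "quiet"), ([0.0, 0.1, 0.0, 0.1], "quiet"),
           ([0.0, 5.0, -5.0, 4.0], "loud"), ([1.0, -4.0, 4.0, -3.0], "loud")]
```

Note that prediction must apply exactly the same feature extraction as training; the trained model only knows about features, never about raw signals.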

Figure 1.2.3-2

Application Layer

The application layer is built upon the enabling technology layer. It includes application platforms and solutions, as shown in the table below.

| Category | Description |
|---|---|
| Application platform | Various types of application platforms developed by combining technologies in different ways, such as industry application distribution and operation platforms, robot operation platforms, and robot vision open platforms. |
| Solution | A large number of scenario-specific applications developed from scenario or industry data, such as intelligent advertising, intelligent diagnosis, automatic writing, identity recognition, intelligent investment advisors, smart assistants, and autonomous vehicles. |

1.3 Development Trend of AI

1.3.1 Bottlenecks and Upsurge

According to McCarthy’s classification, if the rise of machines in cybernetics is excluded, modern AI as a discipline is in its second upsurge of development. No other discipline has experienced such violent ups and downs within just a few decades. Every decline of AI occurred because the expected scientific and technological goals hit a bottleneck, and every rise occurred because new technical means emerged to solve problems that could not be solved before. For example, in the current wave, deep learning has solved speech and image problems that earlier neural networks could not. Then, in a landmark event reminiscent of Deep Blue’s victory over Kasparov, AlphaGo, with reinforcement learning as its core algorithm, defeated Lee Sedol and Ke Jie. Another example comes from logicism: progress in knowledge graphs has gone beyond the domain of expert systems.

AI developers are constantly answering hypothetical questions that were previously addressed by philosophers and science fiction writers; some concern AI itself, and some are ultimate questions of concern to the general public. Science and engineering are making it possible to answer these questions, but high public expectations do not necessarily lead to economic bubbles. Ranked by contribution to the development of AI technology, research should still focus on “computing power”, “data” and “algorithms”. Without sufficient computing power, there is no means of processing massive data. Moreover, many algorithms rely on specific hardware, and once computing power reaches a certain critical point, the corresponding learning algorithms become feasible.

1.3.2 Forecast and Trend

Entering the third decade of the 21st century, AI technology and its application-level solutions are increasingly mature. AI model companies and AI data companies around the world are integrating AI modules, making “industrialized” production and application of AI possible and helping to achieve the ultimate goal of empowering the upgrade and transformation of various industries. This is particularly evident in the application of AI solutions in finance, healthcare, and education.

AI Hardware

The increasing maturity of AI chips has brought them to the preparatory stage of commercialization. Low cost, specialization and system integration make the neural network processing unit (NPU) one of the basic modules of the next-generation terminal-side CPU chipset. In the future, more and more terminal-side CPU chip designs will be planned around deep learning compatibility as a core capability. In addition, computer architecture will be redefined accordingly, with new heterogeneous designs that support AI training and prediction computing.

Deep Learning and AutoML

Deep learning is one of the most effective algorithmic technologies generally recognized by the industry in recent years, and open-source platforms built on deep learning architectures have greatly lowered the barrier to developing related AI technologies and effectively improved quality and efficiency in specific AI application fields. Automated machine learning (AutoML) builds an automatic learning process on top of traditional machine learning. Meta-knowledge-based automatic selection of appropriate data, optimization of model structure, and automatic configuration and construction of training models have greatly reduced the cost and shortened the lifecycle of machine learning, allowing AI applications to spread quickly across business fields.
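AutoML covers much more than this (data selection, model-structure search and so on), but its simplest ingredient, choosing a hyperparameter automatically by a validation score, can be sketched in a few lines. In this hypothetical Python example, the k-nearest-neighbour model, the leave-one-out score, the candidate values of k, and the data are all assumptions for illustration.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """Majority vote among the k labelled examples nearest to `query`."""
    neighbours = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in neighbours).most_common(1)[0][0]


def loo_accuracy(data, k):
    """Leave-one-out accuracy: predict each example from all the others."""
    hits = sum(knn_predict(data[:i] + data[i + 1:], x, k) == y
               for i, (x, y) in enumerate(data))
    return hits / len(data)


def auto_select_k(data, candidates=(1, 3, 5)):
    """Toy stand-in for one AutoML step: keep the candidate with the best score.

    Ties go to the earlier candidate, because max() returns the first maximum.
    """
    return max(candidates, key=lambda k: loo_accuracy(data, k))


# Made-up data: two clusters, plus one point labelled "A" inside cluster B.
DATA = [((0.0, 0.0), "A"), ((0.0, 1.0), "A"), ((1.0, 0.0), "A"), ((1.0, 1.0), "A"),
        ((5.0, 5.0), "B"), ((5.0, 6.0), "B"), ((6.0, 5.0), "B"), ((6.0, 6.0), "B"),
        ((5.5, 5.5), "A")]
```

On this data, k = 1 is misled by the stray "A" point inside cluster B, while k = 3 outvotes it, so the procedure selects k = 3 without any manual tuning.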

5G and IoT

With the development of 5G communication and IoT technology, edge computing capability will break through the boundaries of cloud computing centers and spread to everything. AIoT systems, which integrate AI and IoT technology, will allow the IoT to learn automatically and perform tasks without human involvement, providing the best user experience in almost all vertical industries (finance, manufacturing, healthcare, retail, etc.). AI will be present as a service in every industry and in everyone’s life.

Quantum Computing

Neither “supercomputing” nor “super intelligence” can be achieved along this dimension (namely, knowledge and computing power) in the near future, and the AI “singularity” still seems out of reach. However, “quantum computing” may drive a new round of AI development. Programmable noisy intermediate-scale quantum (NISQ) computing devices are basically ready for error correction and will eventually be able to run quantum algorithms of practical value. This will greatly help the practical application of quantum AI.

Standards and Ethics

The fifth generation (5G) of communication technology has been successfully developed, and the sixth generation (6G) is on the way. However, no standards for AI technology have yet been drafted. In fact, with the outbreak and spread of the COVID-19 pandemic, all walks of life face many challenges and pressures. Large numbers of employees work remotely from home, and people seek to use AI to improve the experience of stakeholders. International partnerships such as the Global Partnership on AI have therefore been launched, focusing on questions such as “how to ensure that AI will be used for solving major global issues, and how to ensure inclusiveness and diversity” and “fairness of consistent algorithm and transparency of data”. Meanwhile, AI-related ethical issues have also become a focus of discussion.